Motion-tracked scriptable simulation agents

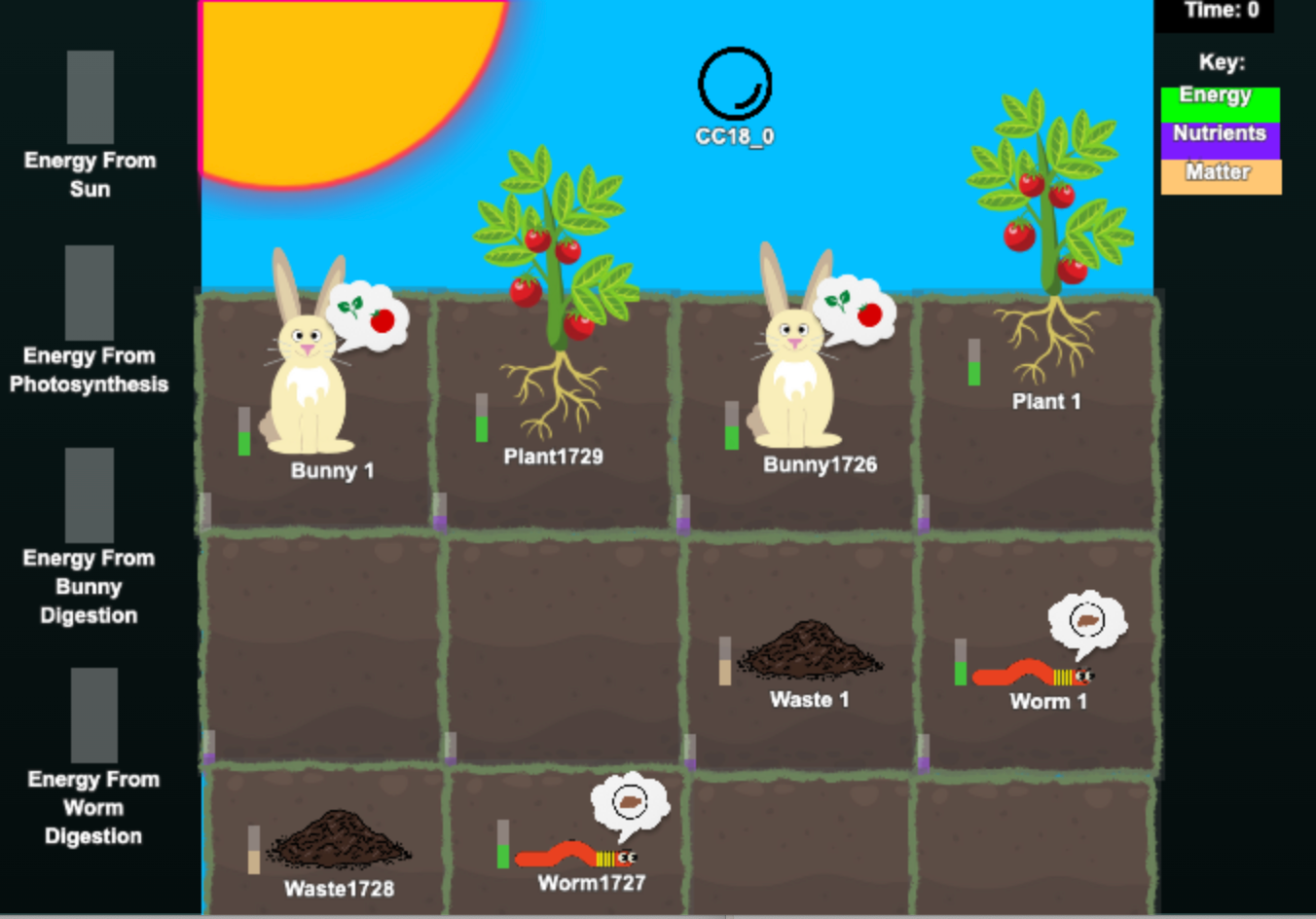

A suite of tools that uses motion tracking technology to let students control a real-time simulation environment populated by student-coded simulation agents.

https://embodiedplay.org/

Client:

Vanderbilt University, Indiana University, UCLA

Funder:

National Science Foundation

Challenge

A research team from Vanderbilt University, Indiana University, and UCLA wanted to build a system that lets elementary school students explore scientific phenomena through embodied play. The phenomena are systems dynamics models, e.g. how forces work, fish pond ecosystems, and the complex behaviors of bees. Students develop an understanding of these phenomena by acting as bees, fish, and beavers in an immersive, interactive simulation environment that responds to their physical actions.

There were a number of challenges in this project:

- How can we track the movements of dozens of students in a relatively small classroom?

- How can we develop a simulation language and authoring environment that lets students express their intuitive understanding while also scaffolding the development of viable simulation agents?

- How can we keep up to 30 students simultaneously engaged?

Solution

Using techniques borrowed from our Take a Stand Holocaust Museum exhibit, we developed a suite of tools that seamlessly integrated three different technologies, enabling students to run around a physical space acting as simulation agents. Students acted as bees, fish, beavers, and other agents, and interacted with other simulation agents (bees, flowers, fish, algae, beavers) projected on a large screen.

In the early iterations of the system, we used camera-based tracking systems to track the position of students, including Microsoft Xbox Kinect and lidar cameras. In later iterations, we used ultra-wideband tags (about the size of a key fob) to track the position of students. We also developed a tablet-based system that allowed students to control the position and actions of agents.

The system included:

- A simulation game server that displays a real-time simulation of agents, projected in front of the classroom.

- A motion tracking system that uses ultra-wideband tags to control the position of dozens of agents simultaneously.

- A touch-based tablet system that allows multiple students to control agent position and actions.

- A tracking server that can integrate feeds from multiple input devices, including camera-based tracking systems and tablets.

- A student-friendly authoring system that allows students to code their own agents and simulation objects as well as use their own drawings as agents.

- A teacher control dashboard that allows teachers to control the simulation environment and track student progress.

- A research data logging system that records all student interactions with the system.
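
To make the tracking server's role more concrete, here is a minimal sketch of how position reports from several input devices might be merged into a single set of agent positions. This is an illustration only, not the project's actual code: the `PositionReport` type, the `merge_reports` function, the source names, the normalized coordinates, and the one-second staleness cutoff are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PositionReport:
    """One position sample from one input device (hypothetical structure)."""
    source: str       # assumed source label, e.g. "uwb", "camera", "tablet"
    agent_id: str     # assumed ID of the student-controlled agent
    x: float          # assumed position normalized to 0..1 across the play space
    y: float
    timestamp: float  # seconds


def merge_reports(reports, now, max_age=1.0):
    """Return {agent_id: (x, y)} using the newest non-stale report per agent."""
    latest = {}
    for report in reports:
        # Ignore samples older than max_age seconds (assumed cutoff).
        if now - report.timestamp > max_age:
            continue
        current = latest.get(report.agent_id)
        if current is None or report.timestamp > current.timestamp:
            latest[report.agent_id] = report
    return {agent_id: (r.x, r.y) for agent_id, r in latest.items()}


# Example: two devices report the same agent; a third report is too old to use.
reports = [
    PositionReport("uwb", "bee-1", 0.20, 0.40, timestamp=10.0),
    PositionReport("tablet", "bee-1", 0.25, 0.45, timestamp=10.5),
    PositionReport("camera", "fish-2", 0.80, 0.10, timestamp=8.0),
]
positions = merge_reports(reports, now=10.6)
print(positions)
```

In this sketch the newest sample wins regardless of which device produced it. A real system might instead prioritize one device over another or smooth positions over time.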

Results

We are on the fourth generation of the motion tracking and simulation system.

- The system is open source and can be built from readily available technologies.

- Researchers have successfully run these environments in dozens of classrooms and other public spaces (e.g. libraries).

- A number of graduate students are currently developing new simulations to explore various phenomena through custom-built environments.

- Researchers have published over 50 papers and presentations documenting this work.

Video

Here’s a video of the GEM-STEP system in action, produced by UCLA REMAP.